Overview

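In a previous article, "TensorFlow 2.0实战DeepFM", we documented how to implement the DeepFM algorithm with the Keras module in TensorFlow 2.0. This article continues with TensorFlow 2.0 and implements another common deep learning recommendation algorithm, Deep&Cross (DCN).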

1. Loading and preprocessing the data

We again use the same 1,000,000-row Criteo sample as before.

import numpy as np
import pandas as pd
from sklearn.preprocessing import LabelEncoder, MinMaxScaler

import tensorflow as tf
from tensorflow.keras import regularizers
# Import the layers used below explicitly instead of a wildcard import
from tensorflow.keras.layers import Input, Embedding, Flatten, Dense, Dropout, Concatenate
from tensorflow.keras.models import Model
from tensorflow.keras.utils import plot_model
import tensorflow.keras.backend as K
from tensorflow.keras.callbacks import TensorBoard

import matplotlib.pyplot as plt
%matplotlib inline

data = pd.read_csv('criteo/criteo_sampled_data.csv', sep='\t', header=None)

data.head()

label = ['label']

dense_features = ['I' + str(i) for i in range(1, 14)]

sparse_features = ['C' + str(i) for i in range(1, 27)]

name_list = label + dense_features + sparse_features

data.columns = name_list

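Process the continuous features:

# Fill missing values in the numerical features with 0
data[dense_features] = data[dense_features].fillna(0)

# Normalize the numerical features to [0, 1]
scaler = MinMaxScaler(feature_range=(0, 1))
data[dense_features] = scaler.fit_transform(data[dense_features])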
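To make the scaling step concrete, here is a minimal NumPy sketch of what filling missing values with 0 and then min-max scaling does to a single column. The array `col` is made-up illustrative data, not taken from the Criteo sample, and the formula is the standard min-max definition rather than a copy of scikit-learn's internals.

```python
import numpy as np

# Hypothetical toy column with one missing value (not real Criteo data)
col = np.array([2.0, np.nan, 10.0, 6.0])

# Step 1: fill missing values with 0, as fillna(0) does
filled = np.where(np.isnan(col), 0.0, col)

# Step 2: min-max scale to [0, 1]: (x - min) / (max - min)
scaled = (filled - filled.min()) / (filled.max() - filled.min())

print(scaled)
```

Note that in this toy column the filled-in 0 becomes the column minimum, so a missing value ends up indistinguishable from the smallest observed value after scaling.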

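Process the sparse categorical features:

# Fill missing values with the placeholder category "-1"
data[sparse_features] = data[sparse_features].fillna("-1")

# Encode each category as an integer id
for feat in sparse_features:
    lbe = LabelEncoder()
    data[feat] = lbe.fit_transform(data[feat])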
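As a rough illustration of the encoding step, the sketch below reproduces the same kind of mapping with plain NumPy: distinct values are sorted and each row is replaced by the index of its value. The column `col` is hypothetical, and `np.unique` is used here only as a stand-in to show the idea, not as a claim about how `LabelEncoder` is implemented.

```python
import numpy as np

# Hypothetical categorical column, with the missing value already replaced by "-1"
col = np.array(["b7", "-1", "a3", "b7", "a3"])

# np.unique sorts the distinct values and returns, for each row,
# the index of its value in that sorted list
classes, codes = np.unique(col, return_inverse=True)

print(classes)  # sorted distinct categories
print(codes)    # integer id per row, in [0, len(classes) - 1]
```

The ids are contiguous integers starting at 0, which is the form an embedding lookup expects as input.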

2. 建模训练

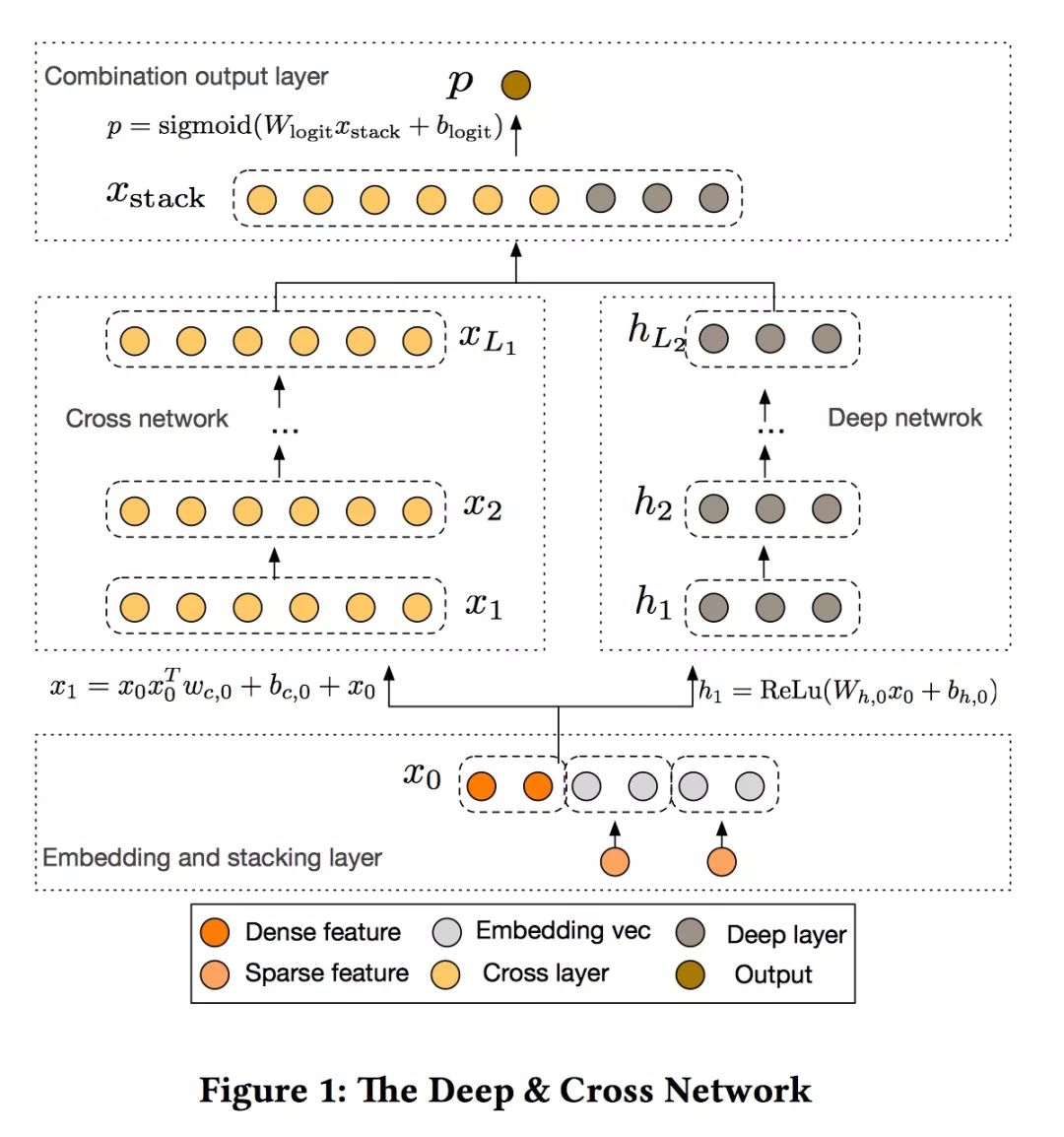

我们先来看看DCN算法的架构图:

2.1 Input layer

# Dense features
dense_inputs = []
for fea in dense_features:
    _input = Input([1], name=fea)
    dense_inputs.append(_input)

dense_inputs

This outputs the tensors of the 13 dense features:

[<tf.Tensor 'I1:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I2:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I3:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I4:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I5:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I6:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I7:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I8:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I9:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I10:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I11:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I12:0' shape=(None, 1) dtype=float32>,

<tf.Tensor 'I13:0' shape=(None, 1) dtype=float32>]

The dense features do not need an embedding, so we simply concatenate them:

concat_dense_inputs = Concatenate(axis=1)(dense_inputs)

Next we process the sparse features by embedding them. We assume each feature is mapped to an 8-dimensional vector:

sparse_inputs = []
for fea in sparse_features:
    _input = Input([1], name=fea)
    sparse_inputs.append(_input)

k = 8  # embedding dimension
sparse_kd_embed = []
for _input in sparse_inputs:
    f = _input.name.split(':')[0]  # feature name, e.g. 'C1' from 'C1:0'
    voc_size = data[f].nunique()
    _embed = Flatten()(Embedding(voc_size+1, k, embeddings_regularizer=regularizers.l2(0.7))(_input))
    sparse_kd_embed.append(_embed)

sparse_kd_embed

The results after embedding:

[<tf.Tensor 'flatten/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_1/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_2/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_3/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_4/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_5/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_6/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_7/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_8/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_9/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_10/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_11/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_12/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_13/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_14/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_15/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_16/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_17/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_18/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_19/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_20/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_21/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_22/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_23/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_24/Identity:0' shape=(None, 8) dtype=float32>,

<tf.Tensor 'flatten_25/Identity:0' shape=(None, 8) dtype=float32>]

Concatenate the embedded sparse features:

concat_sparse_inputs = Concatenate(axis=1)(sparse_kd_embed)
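The lookup-and-flatten step behind each entry of `sparse_kd_embed` can be sketched in NumPy as indexing into a table with one row per category id. The vocabulary size, the random table, and the ids below are all hypothetical values chosen for illustration; in the model the table is a trainable weight of the `Embedding` layer.

```python
import numpy as np

rng = np.random.default_rng(0)

voc_size, k = 5, 8                           # hypothetical vocabulary size and embedding width
table = rng.normal(size=(voc_size + 1, k))   # one row per category id

ids = np.array([[3], [0], [3]])              # batch of 3 samples, shape (batch, 1) like Input([1])
embedded = table[ids]                        # lookup, shape (batch, 1, k)
flat = embedded.reshape(len(ids), -1)        # flatten, shape (batch, k)

print(embedded.shape, flat.shape)
```

Rows with the same id (the first and third sample here) receive the same vector, which is what lets the model share statistics across samples of one category.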

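Finally, we concatenate the dense part and the sparse part. The result forms the embedding and stacking layer of the whole Deep&Cross algorithm and is shared by both the Cross network and the Deep network, which is a clear difference from the DeepFM algorithm.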

embed_inputs = Concatenate(axis=1)([concat_sparse_inputs, concat_dense_inputs])
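As a quick sanity check on the width of `embed_inputs`, the following sketch repeats the three concatenations with zero-filled NumPy arrays of the same shapes (26 sparse features embedded to 8 dimensions each, plus 13 dense scalars). The batch size of 4 is an arbitrary assumption.

```python
import numpy as np

batch, k = 4, 8               # arbitrary batch size, embedding width from the text
n_sparse, n_dense = 26, 13    # number of C* and I* features

sparse_parts = [np.zeros((batch, k)) for _ in range(n_sparse)]
dense_parts = [np.zeros((batch, 1)) for _ in range(n_dense)]

concat_sparse = np.concatenate(sparse_parts, axis=1)   # (batch, 26 * 8)
concat_dense = np.concatenate(dense_parts, axis=1)     # (batch, 13)
embed = np.concatenate([concat_sparse, concat_dense], axis=1)

print(embed.shape)  # (4, 221)
```

So both the Cross network and the Deep network receive a 221-dimensional vector per sample.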

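2.2 Cross network

First, a helper that builds a single cross layer:

class CrossLayer(tf.keras.layers.Layer):
    """
    One cross layer: x_{l+1} = x0 * (xl^T w) + b + xl
    """

    def build(self, input_shape):
        # Embedding size of xl (the inputs arrive as [x0, xl])
        embed_dim = int(input_shape[1][-1])
        # Create w and b with add_weight so that Keras tracks and trains them;
        # a bare tf.Variable created in a plain function is not registered
        # as a weight of a functional model
        self.w = self.add_weight(name='w', shape=(embed_dim, 1),
                                 initializer=tf.keras.initializers.TruncatedNormal(stddev=0.01))
        self.b = self.add_weight(name='b', shape=(embed_dim,), initializer='zeros')

    def call(self, inputs):
        x0, xl = inputs
        # Feature crossing: compute xl^T w first, shape (batch, 1)
        x_lw = tf.matmul(xl, self.w)
        return x0 * x_lw + self.b + xl

def cross_layer(x0, xl):
    """
    Apply one cross layer
    @param x0: the embedding layer output obtained above
    @param xl: the output of layer l (note: the letter l, not the digit 1)
    """
    return CrossLayer()([x0, xl])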

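Note that when computing $x_{0}x_{l}^{T}W_{l}$, we should always compute $x_{l}^{T}W_{l}$ first. It is a single scalar per sample, so the $d \times d$ outer product $x_{0}x_{l}^{T}$ never has to be materialized, which saves memory.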
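The effect of the multiplication order can be checked numerically. The sketch below uses small random column vectors (the width `d = 6` and the values are arbitrary assumptions) and compares the naive order, which builds the full outer product, with the cheaper order, which reduces to a scalar first.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 6                            # hypothetical feature width
x0 = rng.normal(size=(d, 1))     # column vector x_0
xl = rng.normal(size=(d, 1))     # column vector x_l
w = rng.normal(size=(d, 1))      # weight vector W_l

# Naive order: build the d x d outer product first, then multiply by w
outer = x0 @ xl.T                # intermediate of shape (d, d)
naive = outer @ w

# Cheaper order: x_l^T w is a 1 x 1 scalar, then scale x_0 by it
scalar = xl.T @ w                # intermediate of shape (1, 1)
cheap = x0 * scalar

print(np.allclose(naive, cheap))
```

Both orders give the same vector by associativity of matrix multiplication; only the size of the intermediate result differs, d*d values versus a single value per sample.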

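Then stack the cross layers one by one:

def build_cross_layer(x0, num_layer=3):
    """
    Build a multi-layer cross network
    @param x0: the embedding layer output obtained above
    @param num_layer: number of layers in the cross network
    """
    # Initialize xl as x0
    xl = x0
    # Stack the cross layers
    for i in range(num_layer):
        xl = cross_layer(x0, xl)
    return xl

Now build the cross network: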

cross_layer_output = build_cross_layer(embed_inputs, 3)
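For readers who want to trace the recurrence without TensorFlow, here is a minimal NumPy sketch of the forward pass of a stacked cross network. The function name `cross_forward`, the batch size, the width, and the random weights are all assumptions made for this illustration; it is not the Keras code above, only the same formula applied to plain arrays.

```python
import numpy as np

def cross_forward(x0, weights, biases):
    """Apply len(weights) cross layers: x_{l+1} = x0 * (x_l . w_l) + b_l + x_l."""
    xl = x0
    for w, b in zip(weights, biases):
        x_lw = xl @ w              # (batch, 1): one scalar per sample
        xl = x0 * x_lw + b + xl    # broadcast over features, plus the residual term
    return xl

rng = np.random.default_rng(0)
batch, d, num_layer = 2, 5, 3      # hypothetical sizes
x0 = rng.normal(size=(batch, d))
weights = [rng.normal(scale=0.01, size=(d, 1)) for _ in range(num_layer)]
biases = [np.zeros(d) for _ in range(num_layer)]

out = cross_forward(x0, weights, biases)
print(out.shape)  # (2, 5)
```

Two properties are visible here: every layer keeps the input width, and with all weights and biases at zero the network reduces to the identity because of the residual term.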

2.3 Deep network

This part shares the embedding result obtained above with the Cross part.

fc_layer = Dropout(0.5)(Dense(128, activation='relu')(embed_inputs))  # the Deep and Cross parts share the embedding result

fc_layer = Dropout(0.3)(Dense(128, activation='relu')(fc_layer))

fc_layer_output = Dropout(0.1)(Dense(128, activation='relu')(fc_layer))

2.4 Output layer

We stack the outputs of the Deep part and the Cross part:

stack_layer = Concatenate()([cross_layer_output, fc_layer_output])

output_layer = Dense(1, activation='sigmoid', use_bias=True)(stack_layer)
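The stacking and the final sigmoid unit can be sketched in NumPy as follows. The widths follow from the code above (221 for the cross output, assuming 26 embedded sparse features of width 8 plus 13 dense features, and 128 for the last Dense layer); the random inputs and the weights `w` and `b` are placeholders for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
batch = 3                                     # arbitrary batch size
cross_out = rng.normal(size=(batch, 221))     # cross network keeps the input width
deep_out = rng.normal(size=(batch, 128))      # last Dense layer has 128 units

stacked = np.concatenate([cross_out, deep_out], axis=1)   # (batch, 349)
w = rng.normal(scale=0.01, size=(stacked.shape[1], 1))    # hypothetical output weights
b = 0.0                                                   # hypothetical output bias
prob = sigmoid(stacked @ w + b)                           # (batch, 1), click probability

print(stacked.shape, prob.shape)
```

The output layer is therefore a logistic regression over the 349 stacked features.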

2.5 Compiling the model

Create the model and inspect its structure:

model = Model(dense_inputs + sparse_inputs, output_layer)

plot_model(model, "dcn.png")

model.summary()

Compile the model:

model.compile(optimizer="adam",
              loss="binary_crossentropy",
              metrics=["binary_crossentropy", tf.keras.metrics.AUC(name='auc')])
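For reference, the binary cross-entropy that serves as both loss and metric can be computed by hand as below. This is a plain NumPy sketch of the textbook formula with made-up labels and predictions; the clipping constant `eps` is an assumption for numerical safety and not necessarily the value Keras uses.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over all samples."""
    y_pred = np.clip(y_pred, eps, 1 - eps)   # avoid log(0)
    losses = -(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))
    return float(np.mean(losses))

# Made-up labels and predicted probabilities
y_true = np.array([1.0, 0.0, 1.0, 0.0])
y_pred = np.array([0.9, 0.1, 0.5, 0.5])

bce = binary_crossentropy(y_true, y_pred)
baseline = binary_crossentropy(y_true, np.full(4, 0.5))   # always predicting 0.5
print(bce, baseline)
```

A model that always predicts 0.5 scores ln 2, about 0.693, which is a useful yardstick when reading the training log.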

2.6 Training the model

tbCallBack = TensorBoard(log_dir='./logs',
                         histogram_freq=0,
                         write_graph=True,
                         # write_grads=True,
                         write_images=True,
                         embeddings_freq=0,
                         embeddings_layer_names=None,
                         embeddings_metadata=None)

train_data = data.loc[:800000-1]

valid_data = data.loc[800000:]

train_dense_x = [train_data[f].values for f in dense_features]

train_sparse_x = [train_data[f].values for f in sparse_features]

train_label = [train_data['label'].values]

val_dense_x = [valid_data[f].values for f in dense_features]

val_sparse_x = [valid_data[f].values for f in sparse_features]

val_label = [valid_data['label'].values]

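model.fit(train_dense_x+train_sparse_x,
          train_label, epochs=5, batch_size=128,
          validation_data=(val_dense_x+val_sparse_x, val_label),
          callbacks=[tbCallBack])

Training results (the reported loss also includes the L2 regularization penalty on the embeddings, which is why it is higher than binary_crossentropy):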

Epoch 1/5

6250/6250 [==============================] - 309s 49ms/step - loss: 14.1232 - binary_crossentropy: 0.5130 - auc: 0.7139 - val_loss: 0.6548 - val_binary_crossentropy: 0.5039 - val_auc: 0.7356

Epoch 2/5

6250/6250 [==============================] - 307s 49ms/step - loss: 0.6697 - binary_crossentropy: 0.5056 - auc: 0.7259 - val_loss: 0.6767 - val_binary_crossentropy: 0.4949 - val_auc: 0.7364

Epoch 3/5

6250/6250 [==============================] - 307s 49ms/step - loss: 0.6805 - binary_crossentropy: 0.5036 - auc: 0.7291 - val_loss: 0.6862 - val_binary_crossentropy: 0.4947 - val_auc: 0.7371

Epoch 4/5

6250/6250 [==============================] - 308s 49ms/step - loss: 0.6845 - binary_crossentropy: 0.5025 - auc: 0.7308 - val_loss: 0.6864 - val_binary_crossentropy: 0.4962 - val_auc: 0.7392

Epoch 5/5

6250/6250 [==============================] - 307s 49ms/step - loss: 0.6888 - binary_crossentropy: 0.5018 - auc: 0.7317 - val_loss: 0.6823 - val_binary_crossentropy: 0.4942 - val_auc: 0.7378
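The `6250/6250` step count in the log follows directly from the split and the batch size. The short calculation below reproduces it, assuming the file has exactly 1,000,000 rows with a default integer index, as stated at the start of the article.

```python
import math

n_total = 1_000_000      # rows in the sampled Criteo file
n_train = 800_000        # rows 0..799999, selected by data.loc[:800000-1] (loc is inclusive)
batch_size = 128

n_valid = n_total - n_train
steps_per_epoch = math.ceil(n_train / batch_size)

print(n_valid, steps_per_epoch)  # 200000 6250
```

This is an 80/20 split by row position, not a shuffled split.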

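At this point, the Deep&Cross algorithm has been implemented in TensorFlow 2.0. Overall, it is somewhat simpler to implement than DeepFM.

This article mainly draws on the following articles: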

CTR模型代码实战

CTR预估模型:DeepFM/Deep&Cross/xDeepFM/AutoInt代码实战与讲解